- 19-12-2025

- Computer Vision

AI systems and humans interpret the visual world in very different ways. New research reveals that this gap explains why AI-generated images often appear overly bright, generic, and exaggerated compared with human-created visuals.

The research examines why AI-generated images often feel unnatural on closer inspection, even when they look impressive at first glance. Human vision is shaped by biology: our eyes and brain work together to perceive color, depth, and movement and to read cultural context and emotional cues. This lets us interpret meaning and realism in everyday scenes.

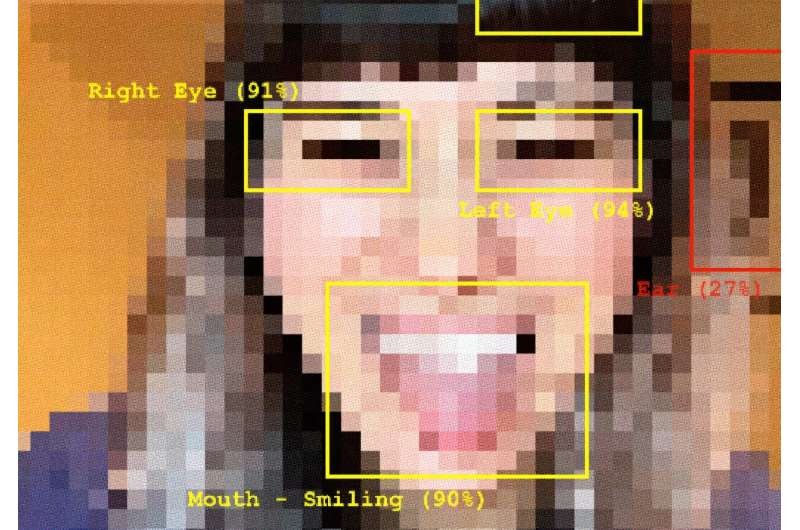

AI systems, in contrast, process images computationally. They analyze pixels, edges, textures, and patterns, matching them against statistical regularities learned from vast image collections. Rather than understanding context the way humans do, AI relies on patterns in its training data, much of which comes from high-contrast stock imagery.

When AI systems are asked to describe and then recreate images, they tend to default to a photorealistic style. They often overlook subtle color differences, depth, and cultural signals, producing visuals that are boxier, more saturated, and more sensational than the original human-made images. Simple scenes may be exaggerated into dramatic, attention-grabbing visuals. These differences help explain why AI images can feel generic or emotionally hollow.

While AI vision excels at rapid image labeling, categorization, and large-scale analysis, human-created images remain better at conveying authenticity, nuance, and lived experience. The takeaway is not that one form of vision is superior, but that they serve different purposes. Knowing when to rely on human perception, machine vision, or a combination of both can lead to more effective communication, safer systems, and smarter use of visual AI technologies.
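The claim that AI recreations come out "more saturated" can be made concrete with a simple pixel-level measurement. The sketch below is a minimal illustration, not the study's method: it averages the HSV saturation of a set of 8-bit RGB pixels using Python's standard `colorsys` module. The function name `mean_saturation` and the sample pixel values are hypothetical, chosen only to show the contrast between muted and vivid colors.

```python
import colorsys


def mean_saturation(pixels):
    """Return the average HSV saturation (0.0 to 1.0) of 8-bit (R, G, B) tuples."""
    total = 0.0
    count = 0
    for r, g, b in pixels:
        # colorsys expects each channel scaled to the range [0, 1]
        _, s, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        total += s
        count += 1
    if count == 0:
        raise ValueError("no pixels supplied")
    return total / count


# Hypothetical toy samples for illustration only, not data from the research
muted = [(120, 110, 100), (90, 95, 100), (200, 190, 180)]
vivid = [(255, 40, 0), (0, 90, 255), (250, 220, 0)]

print(f"muted: {mean_saturation(muted):.2f}")  # muted: 0.12
print(f"vivid: {mean_saturation(vivid):.2f}")  # vivid: 1.00
```

To apply this to real images, the pixels could come from an imaging library; with Pillow, for example, `Image.open(path).convert("RGB").getdata()` yields `(R, G, B)` tuples. A single average is a crude proxy: it ignores where saturation appears in the frame and says nothing about depth or cultural cues, which are the subtleties the research says machines tend to miss.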