- 16-01-2026

- Artificial Intelligence

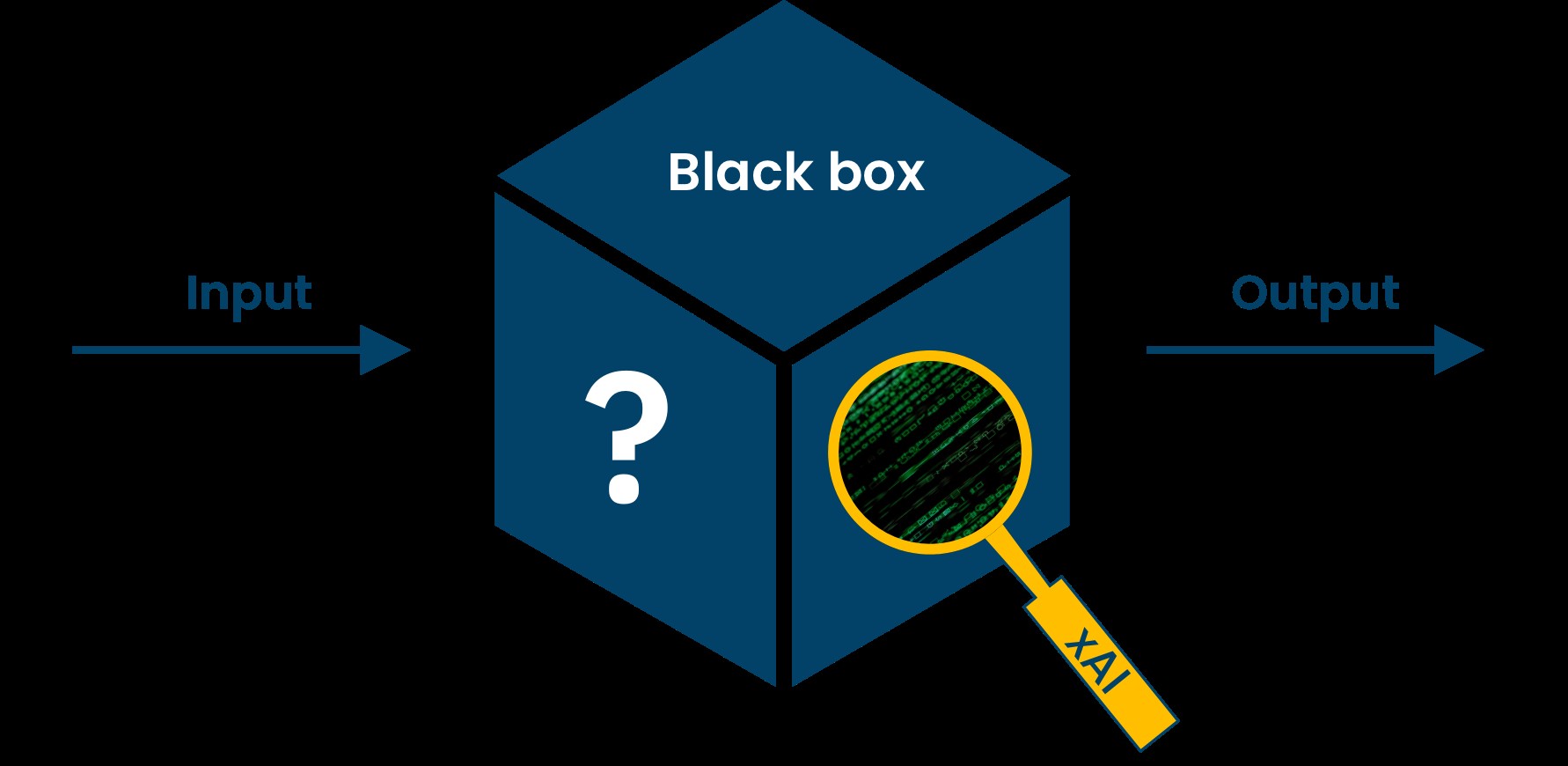

A new study shows how opaque “black-box” AI models can be interpreted through human-readable data. By analyzing the training data rather than only model outputs, the approach reveals which information influences an AI’s predictions.

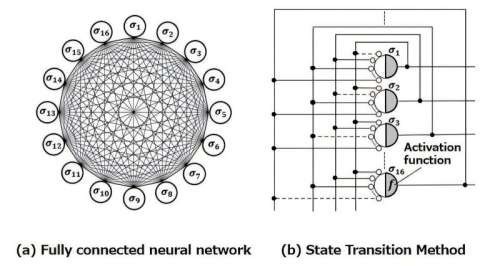

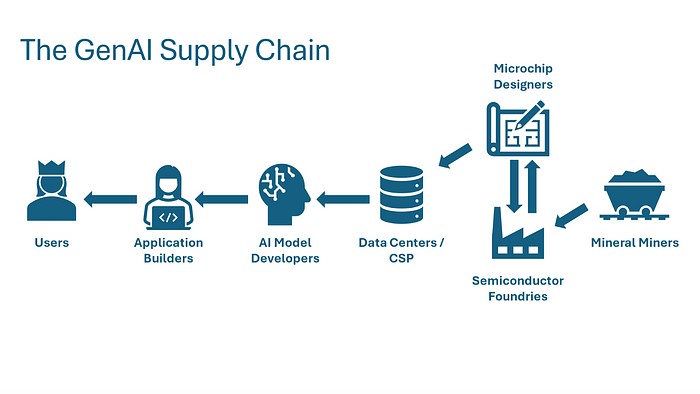

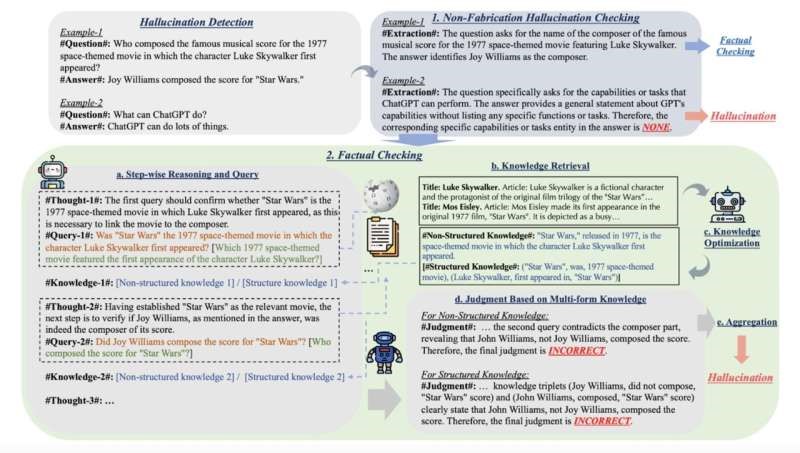

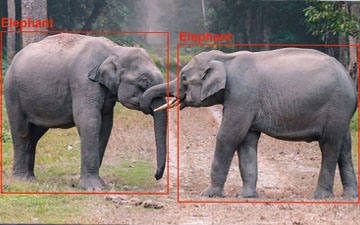

A recent study introduces a breakthrough technique designed to address one of AI’s biggest challenges: the opacity of black-box models. While deep learning systems excel at tasks like image recognition, they typically provide little insight into why they make specific decisions. This lack of transparency limits trust, accountability, and safe deployment.

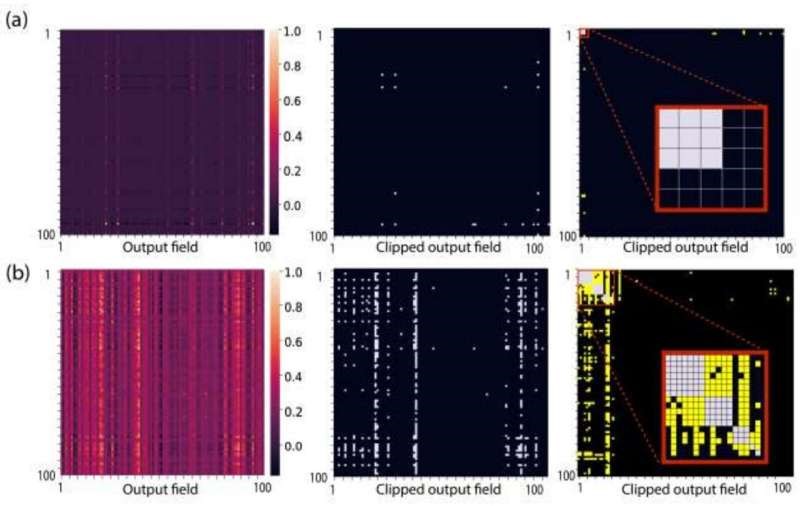

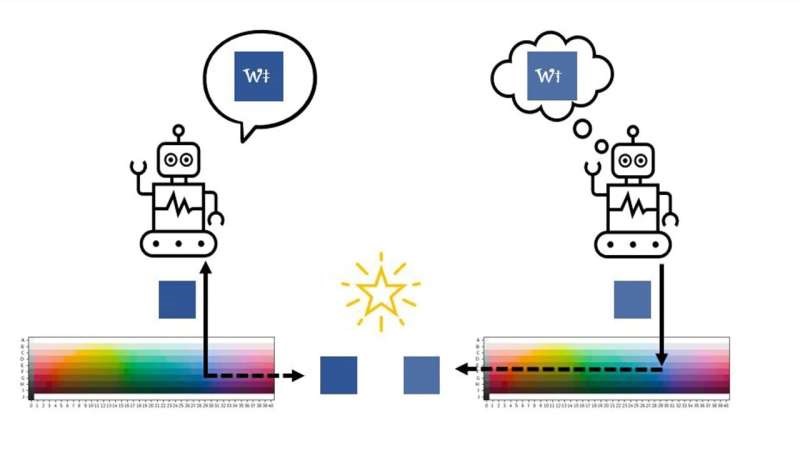

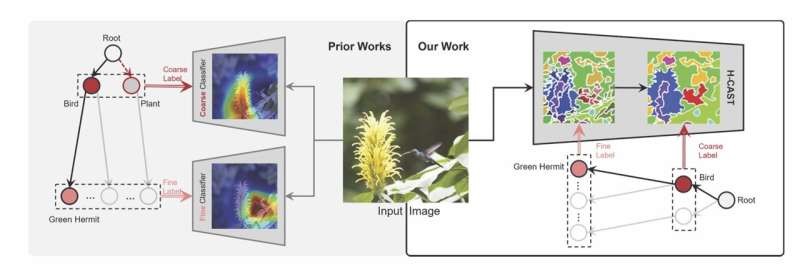

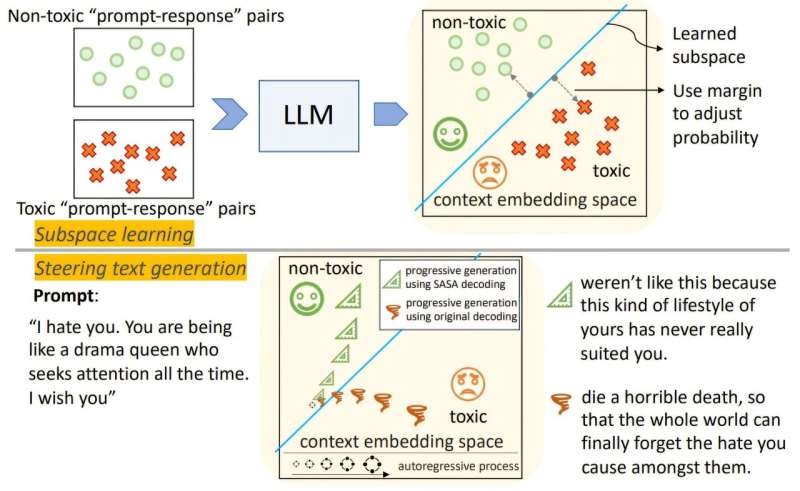

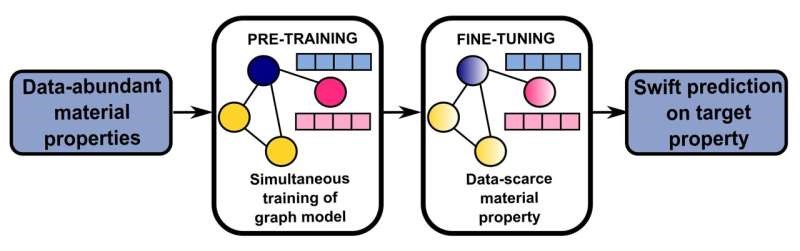

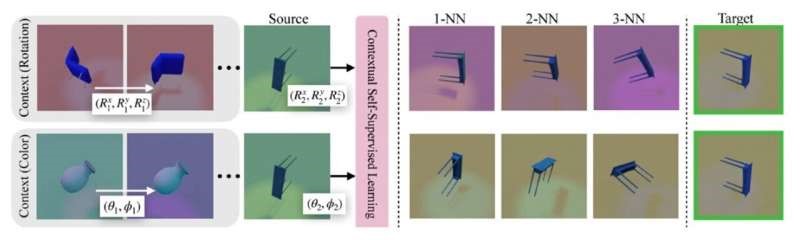

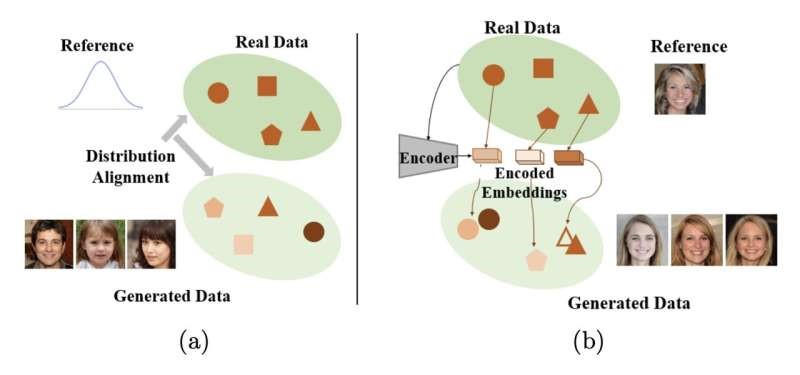

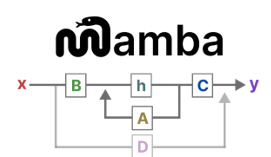

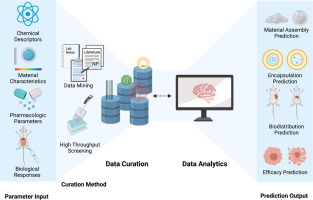

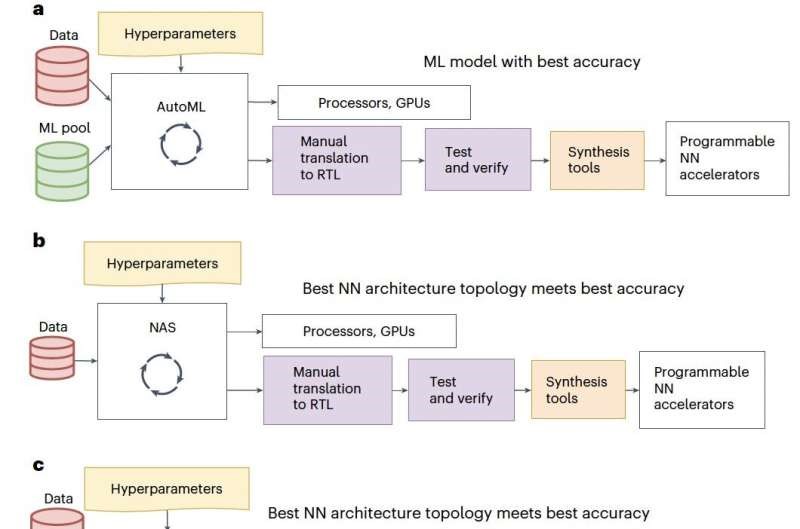

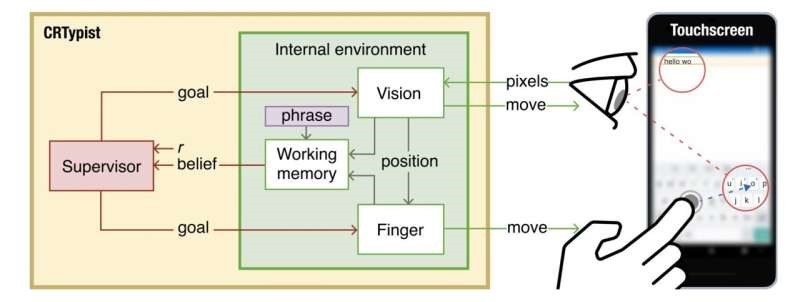

The new approach, developed by researchers at the UNIST Graduate School of Artificial Intelligence and reported by Tech Xplore, shifts the focus from model internals to the training data itself. Instead of probing layers and activations, the method uses large language models to generate detailed, human-readable descriptions of training images. These descriptions are then evaluated for their impact on model predictions using a novel metric called Influence Scores for Texts (IFT).

IFT measures both:

• how strongly each description affects the model’s accuracy

• how well the description aligns with the image content

The result is a clearer, more interpretable map of what AI models actually learn and prioritize.
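The article does not give the IFT formula, so the following is only a toy sketch of the two ingredients listed above, not the researchers' method. It assumes each description comes with a hypothetical embedding and a hypothetical measurement of model accuracy without that description; the names `Description`, `toy_influence_score`, and `rank_descriptions`, and the choice to multiply the two terms, are all illustrative assumptions.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class Description:
    text: str
    embedding: list[float]    # hypothetical text embedding of the description
    accuracy_without: float   # hypothetical model accuracy when this description is left out


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def toy_influence_score(desc: Description,
                        image_embedding: list[float],
                        baseline_accuracy: float) -> float:
    """Toy score (an assumption, not the published IFT definition).

    Combines (1) how much accuracy drops without the description and
    (2) how well the description aligns with the image content.
    """
    accuracy_effect = baseline_accuracy - desc.accuracy_without
    alignment = max(0.0, cosine(desc.embedding, image_embedding))
    return accuracy_effect * alignment


def rank_descriptions(descs: list[Description],
                      image_embedding: list[float],
                      baseline_accuracy: float) -> list[tuple[str, float]]:
    """Return (text, score) pairs sorted from most to least influential."""
    scored = [(d.text, toy_influence_score(d, image_embedding, baseline_accuracy))
              for d in descs]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up numbers for illustration only.
image_embedding = [1.0, 0.0]
descriptions = [
    Description("a striped cat on a sofa", [1.0, 0.0], accuracy_without=0.80),
    Description("a blurry watermark", [0.0, 1.0], accuracy_without=0.70),
]
ranked = rank_descriptions(descriptions, image_embedding, baseline_accuracy=0.90)
for text, score in ranked:
    print(f"{score:.3f}  {text}")
```

In this toy setup, a description that barely matches the image receives a low score even if leaving it out changes accuracy a lot, which mirrors the idea of weighing both factors together.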

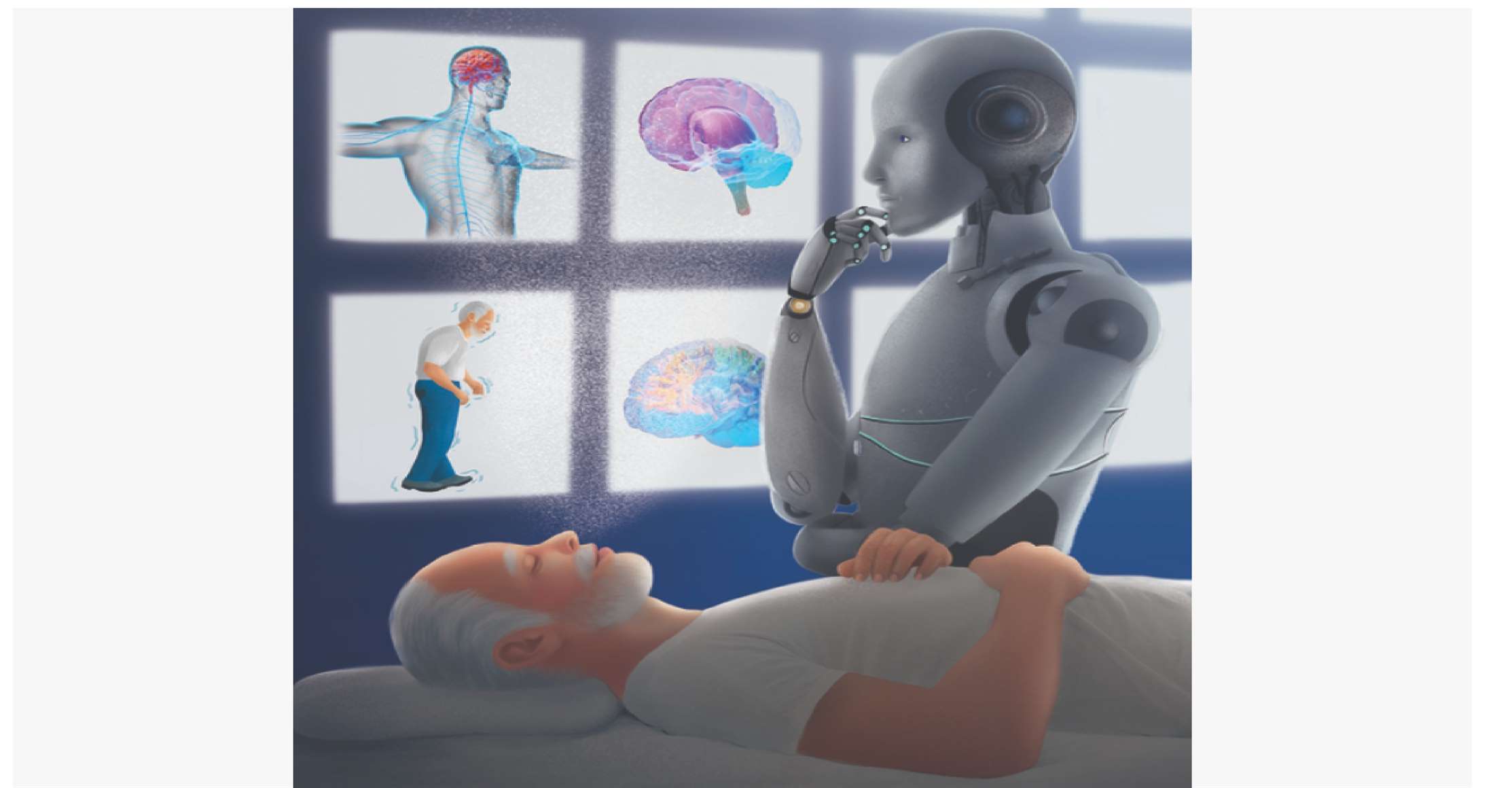

This technique could improve AI transparency across domains, from healthcare diagnostics to autonomous systems, by helping developers understand and validate how models use their training data. As the AI community increasingly calls for safer and more accountable systems, human-readable data interpretation is a promising step toward trustworthy AI.