- 20-06-2025


A newly developed AI pruning technique removes up to 90% of the parameters in certain layers of deep learning models without sacrificing accuracy, cutting memory use, computation, and energy demands.

A recent breakthrough in artificial intelligence has introduced a pruning method that significantly reduces the size and resource demands of deep learning systems without degrading their performance.

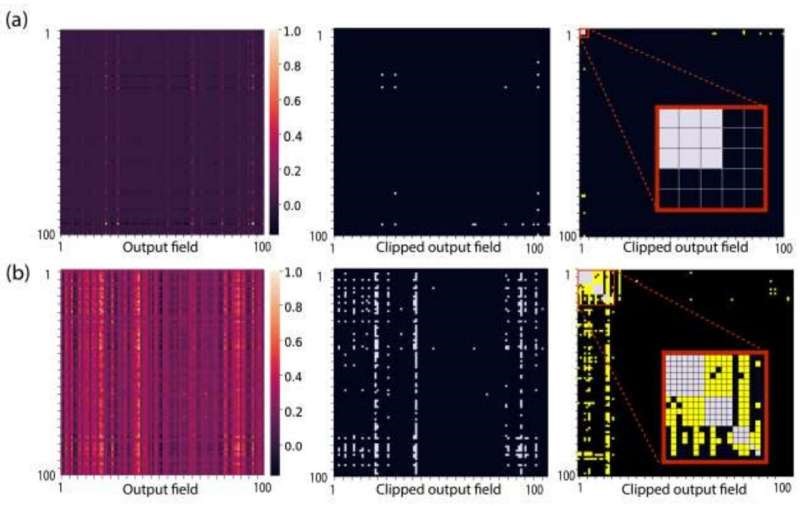

By analyzing how neural networks learn and identifying which parameters are essential, researchers have shown that it's possible to remove up to 90% of parameters in certain layers while maintaining full model accuracy. This makes AI models dramatically lighter, faster, and more energy-efficient.
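The article does not describe the researchers' exact criterion for deciding which parameters are essential. For comparison, the simplest widely used baseline is unstructured magnitude pruning, which treats the weights with the smallest absolute values as the least important and sets them to zero. The sketch below shows that generic baseline, not the new method. It assumes NumPy and a single weight matrix, and the function name `magnitude_prune` is hypothetical.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> tuple[np.ndarray, np.ndarray]:
    """Zero out the `sparsity` fraction of entries with the smallest magnitude.

    Generic magnitude-pruning baseline (not the article's technique).
    Returns the pruned weights and a boolean mask of the entries kept.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    magnitudes = np.abs(weights).ravel()
    k = int(sparsity * magnitudes.size)  # number of weights to remove
    if k == 0:
        mask = np.ones_like(weights, dtype=bool)
    else:
        # The k-th smallest magnitude serves as the pruning threshold;
        # every weight at or below it is removed.
        threshold = np.partition(magnitudes, k - 1)[k - 1]
        mask = np.abs(weights) > threshold
    return weights * mask, mask


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.normal(size=(256, 256))  # stand-in for one layer's weight matrix
    pruned, mask = magnitude_prune(w, sparsity=0.9)
    print(f"kept {mask.mean():.1%} of weights")
```

Zeroing weights in a dense array does not shrink memory or computation by itself. The savings come from storing the surviving weights in a sparse format, or from structured pruning that removes whole rows, channels, or neurons so the remaining layer is genuinely smaller. In practice, pruned models are also usually fine-tuned afterwards to recover any lost accuracy.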

Unlike earlier approaches that offered limited gains or reduced reliability, this method maintains model performance and enables deployment on smaller, lower-power hardware. It offers a clear path toward more sustainable and scalable AI, especially for mobile devices, embedded systems, and other energy-constrained environments.

This development represents a major advance toward green AI, improving the efficiency and accessibility of intelligent systems across industries. By reducing computational overhead without compromising results, it allows AI systems to become leaner and better suited to real-world constraints.