- 06-12-2024

- AI

University of Texas at Austin researchers have developed an AI model that converts audio recordings into street-view images, showing that a machine can link sound to sight.

Researchers at the University of Texas at Austin have developed a groundbreaking AI model that can convert audio recordings into accurate street-view images. The team trained the model on paired audio and visual data from urban and rural locations across North America, Asia, and Europe. The training pairs consisted of 10-second audio clips taken from YouTube videos of different cities, each matched with an image still from the same video. Once trained, the model could generate high-resolution images from audio it had not heard before.

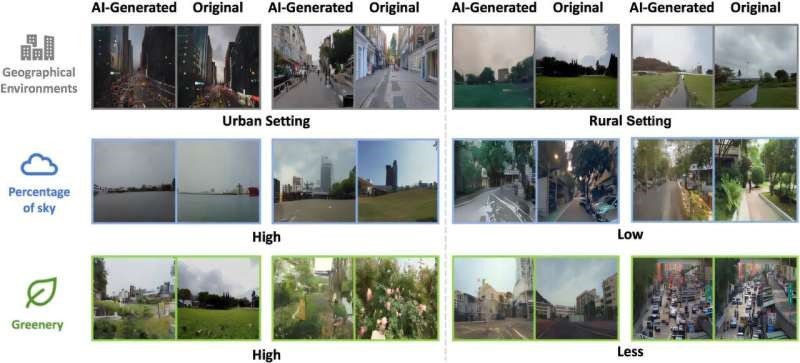

The AI-generated images correlated strongly with real-world images in the proportions of key elements such as sky, greenery, and buildings. Human judges matched the generated images to their corresponding audio clips with 80% accuracy. The generated images also preserved architectural styles and the spatial arrangement of objects, and they reflected the time of day suggested by environmental sounds such as traffic noise or insect chirping.
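
To make the proportion comparison concrete, here is a minimal sketch of how such a check could be computed. It is not the researchers' code. It assumes each image has already been reduced to a 2-D grid of per-pixel labels, and the label names, the function names, and the choice of Pearson correlation are all illustrative assumptions.

```python
from collections import Counter
from math import sqrt

# Hypothetical label set; the study's actual categories may differ.
CLASSES = ("sky", "greenery", "building")


def class_proportions(mask):
    """Return the fraction of pixels belonging to each class in a 2-D label mask."""
    labels = [label for row in mask for label in row]
    counts = Counter(labels)
    return {c: counts[c] / len(labels) for c in CLASSES}


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


def proportion_correlations(real_masks, generated_masks):
    """Correlate per-class pixel proportions across paired real and generated images."""
    real = [class_proportions(m) for m in real_masks]
    gen = [class_proportions(m) for m in generated_masks]
    return {
        c: pearson([r[c] for r in real], [g[c] for g in gen])
        for c in CLASSES
    }
```

A value near 1 for a class would mean that generated images tend to contain more of that element wherever the real scene does.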

This research demonstrates that AI can replicate a human-like ability to associate sound with visual environments, highlighting the potential for machines to approximate the sensory experiences humans have when interacting with their surroundings. The study not only advances our understanding of AI’s capability to recognize and replicate physical environments but also offers new insights into how multisensory factors influence our perception of place.