- 23-01-2026

- Computer Vision

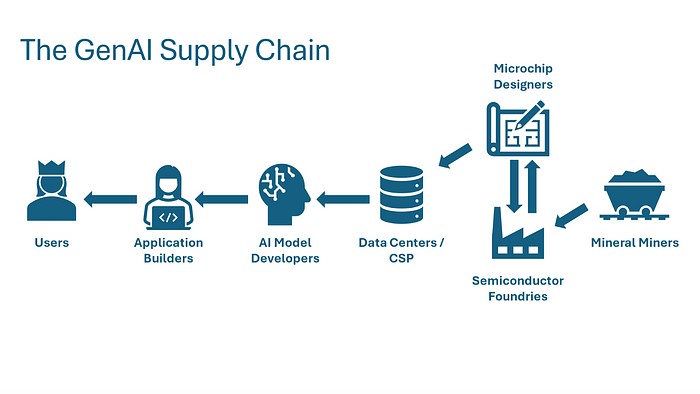

As computer vision takes on more advanced tasks, traditional real-world data collection is struggling to keep up. Experts warn that by 2026, real-world footage alone won't meet the demands of next-generation AI systems.

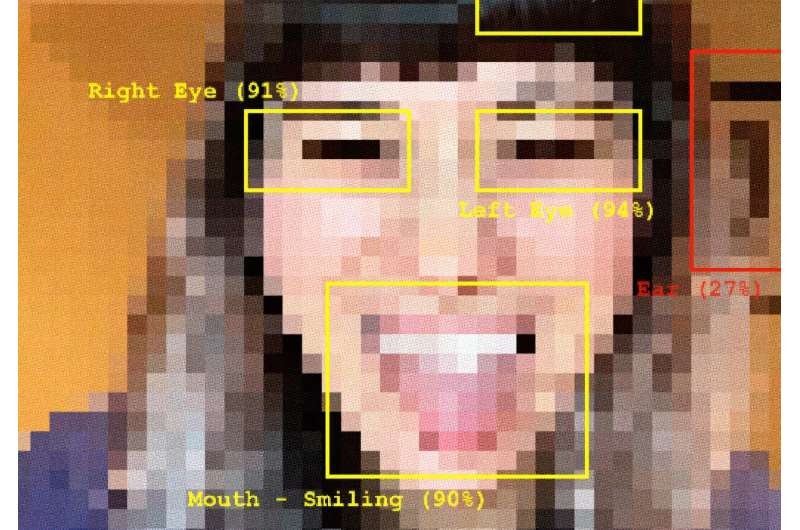

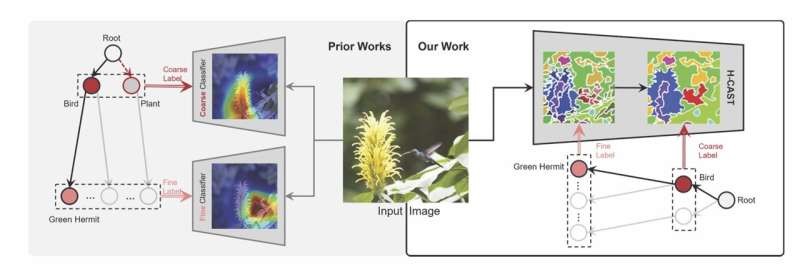

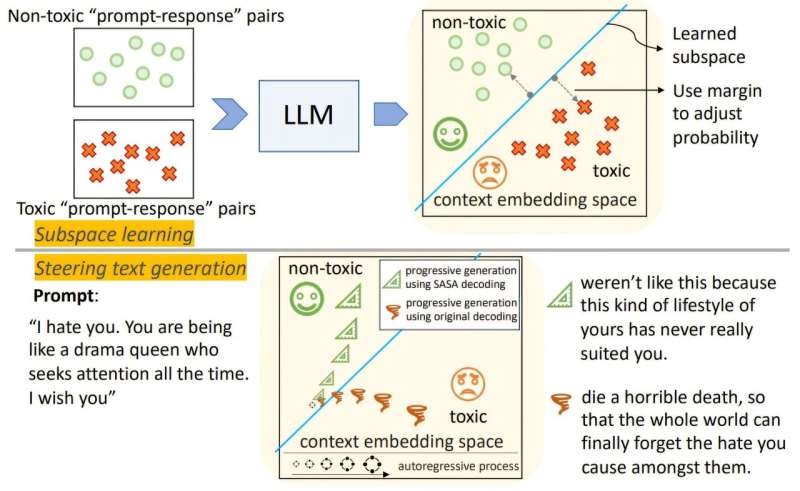

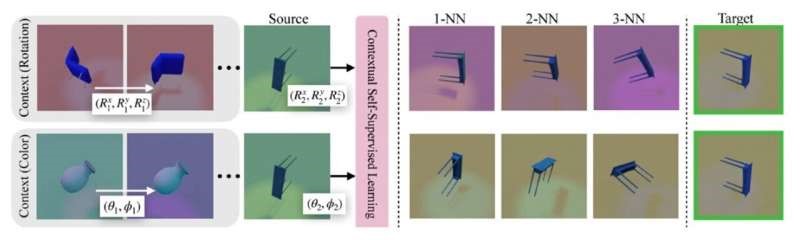

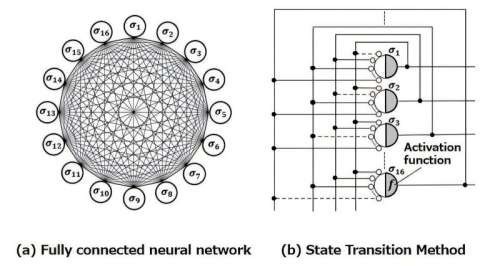

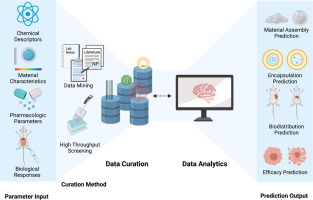

A recent analysis by SKY ENGINE AI highlights the trends that are pushing real-world data to its limits in modern computer vision applications. By 2025, Vision AI was already tackling advanced perception challenges such as contextual understanding, prediction, and multimodal sensing, all areas where traditional image and video datasets fall short.

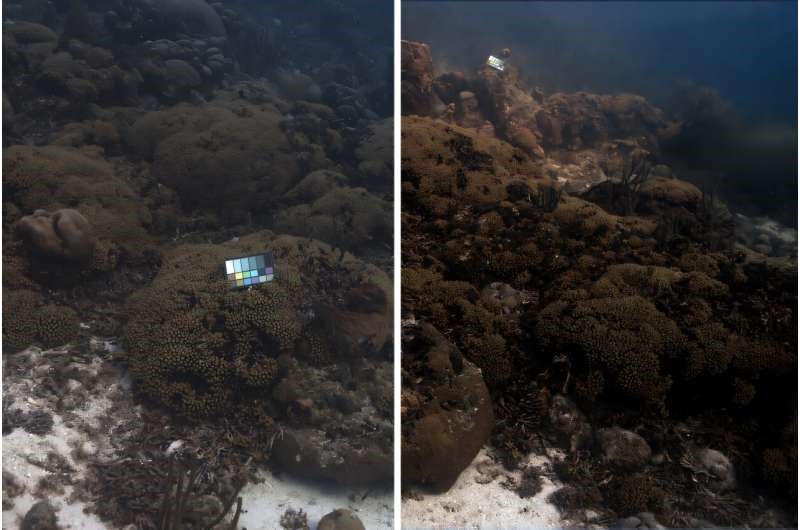

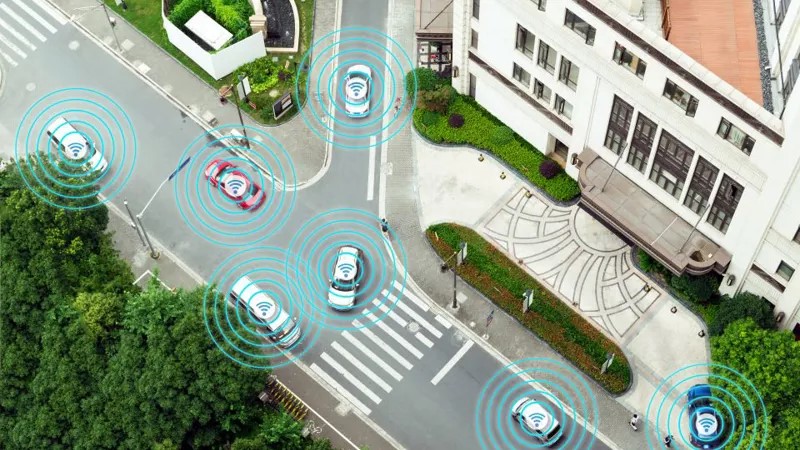

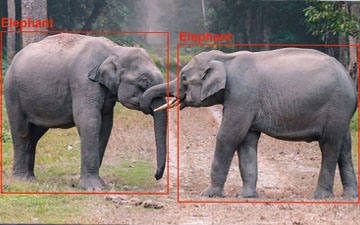

One major reason is that rare or dangerous events, such as atypical human behaviors or critical edge cases, are nearly impossible to capture safely and consistently in real life. For AI systems to learn robustly in domains like autonomous driving, robotics, or safety monitoring, datasets must include diverse, controlled, and repeatable scenarios that real-world collection simply cannot provide.

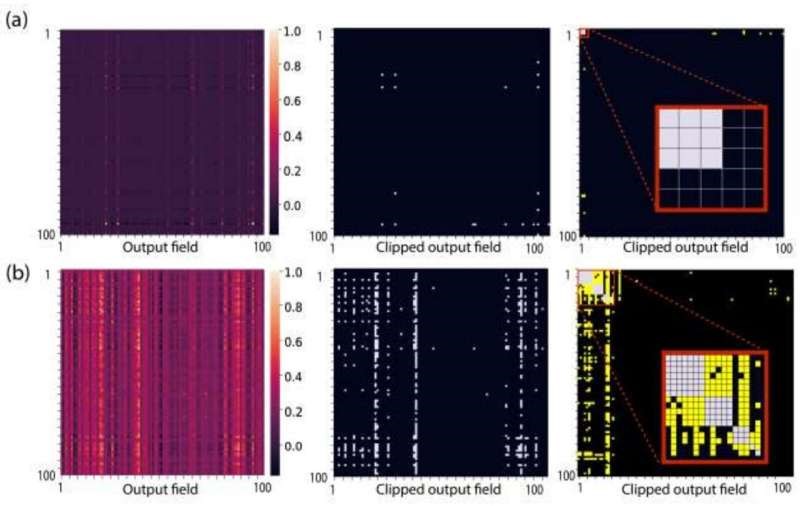

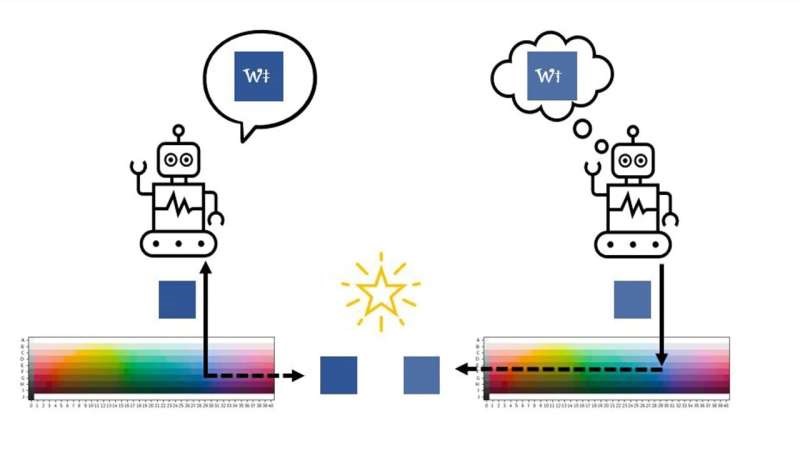

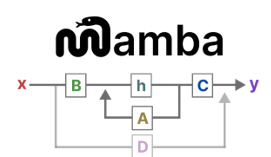

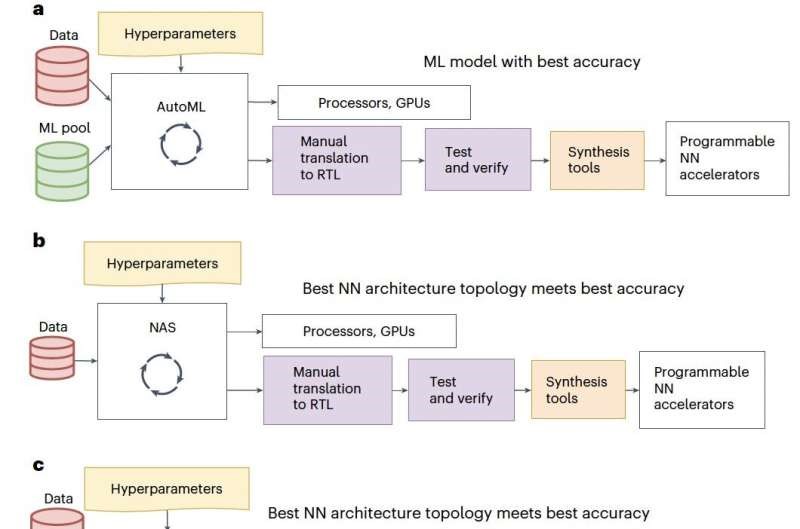

Another accelerating trend is the use of digital twins and virtual sensor replicas: detailed simulations of cameras, LiDAR, radar, and other sensors that mimic real hardware in controlled virtual environments. These simulations allow Vision AI developers to test and train systems in complex scenarios that would be costly or unsafe to capture in the field.
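To make the idea of a virtual sensor replica concrete, here is a minimal sketch of a simulated pinhole camera in Python. It is not taken from the SKY ENGINE AI analysis or any real simulator; the `VirtualCamera` class, its parameters (`focal_px`, `noise_std_px`), and the example point are all hypothetical, and production sensor models also cover lens distortion, exposure, and much more.

```python
import random
from dataclasses import dataclass


@dataclass
class VirtualCamera:
    """Hypothetical pinhole camera model; every parameter is illustrative."""
    focal_px: float = 800.0    # focal length expressed in pixels
    width: int = 1280          # image width in pixels
    height: int = 720          # image height in pixels
    noise_std_px: float = 0.5  # standard deviation of simulated pixel jitter

    def project(self, point, rng=None):
        """Project a 3D point (x, y, z) in camera coordinates to a pixel (u, v).

        Returns None if the point is behind the camera or outside the frame.
        Passing a random.Random instance adds Gaussian pixel noise.
        """
        x, y, z = point
        if z <= 0:
            return None
        u = self.focal_px * x / z + self.width / 2
        v = self.focal_px * y / z + self.height / 2
        if rng is not None:
            u += rng.gauss(0.0, self.noise_std_px)
            v += rng.gauss(0.0, self.noise_std_px)
        if 0 <= u < self.width and 0 <= v < self.height:
            return (u, v)
        return None


camera = VirtualCamera()
rng = random.Random(42)           # fixed seed keeps the simulated capture repeatable
pedestrian = (1.0, 0.0, 10.0)     # 1 m to the right, 10 m ahead (assumed units)
ideal = camera.project(pedestrian)        # noise-free ground truth
noisy = camera.project(pedestrian, rng)   # what the simulated sensor "sees"
```

Because the scene is virtual, the noise-free projection is always available as ground truth alongside the noisy measurement, which is hard to obtain with physical hardware.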

Real-world data collection also struggles with slow cycles and manual labeling bottlenecks, making it difficult for teams to keep pace with fast innovation and evolving requirements from regulators and industry standards.

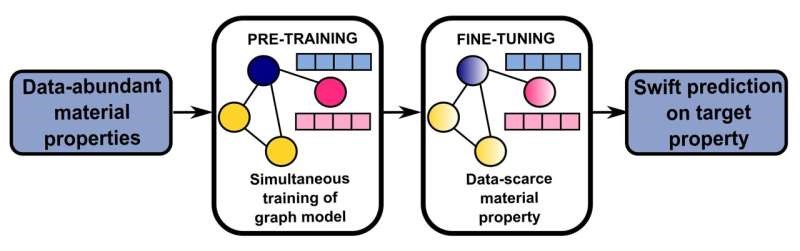

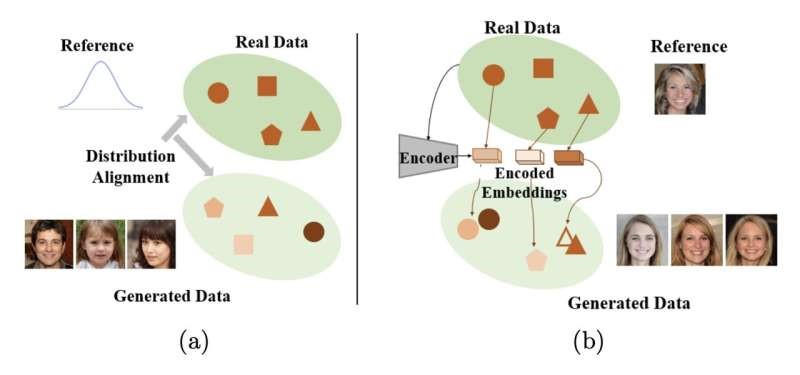

To address these limitations, the industry is increasingly turning to synthetic data, simulation-augmented validation, and multimodal datasets that provide full ground truth, edge-case coverage, and faster development cycles. Analysts forecast that by 2028, most Vision AI models will rely on multimodal training data that real-world footage alone cannot supply.
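As a rough illustration of what "full ground truth" means in practice, the sketch below generates a toy synthetic image together with exact bounding-box labels. The `make_scene` function and its parameters are assumptions made for this example, not part of any tool named in the article; real pipelines render photorealistic scenes, but the principle is the same: the generator knows where every object is, so no manual labeling is needed.

```python
import random


def make_scene(seed, width=64, height=48, max_objects=3):
    """Render a toy binary image and return it with exact box labels.

    Boxes are (x_min, y_min, x_max, y_max) with exclusive max coordinates.
    The same seed always yields the same scene, so rare cases can be replayed.
    """
    rng = random.Random(seed)
    image = [[0] * width for _ in range(height)]
    boxes = []
    for _ in range(rng.randint(1, max_objects)):
        w, h = rng.randint(4, 12), rng.randint(4, 12)
        x0 = rng.randint(0, width - w)
        y0 = rng.randint(0, height - h)
        # Draw the object as a filled rectangle.
        for y in range(y0, y0 + h):
            for x in range(x0, x0 + w):
                image[y][x] = 1
        # The label comes for free from the generator's own state.
        boxes.append((x0, y0, x0 + w, y0 + h))
    return image, boxes


image, boxes = make_scene(seed=7)
```

Changing the seed produces a new labeled scene, while reusing it reproduces the same one, which is how a synthetic pipeline can offer both diversity and repeatability.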

The implication is clear: as computer vision evolves from basic detection to contextual understanding and prediction, developers will need to embrace synthetic and simulated data if they want accurate, scalable, and safe AI systems in 2026 and beyond.