Artificial Intelligence

“The rise of powerful AI will be either the best, or the worst thing, ever to happen to humanity.” — Stephen Hawking

Intelligent machines are something we see and use every day, yet we rarely realize how futuristic, almost sci-fi, they are. Of course, we don't have robots doing daily chores as in I, Robot, nor Will Smith saving the world (yet), but the technology we have today is really impressive.

Talking to Alexa and asking her to play your favorite song seems pretty ordinary today, but a few years ago it was impossible. Speaking of which, Alexa is practically a personification of what artificial intelligence is. She can perform cognitive functions associated with humans, such as perceiving, learning, reasoning, and solving problems. Or have you never told her a joke? Or asked her about the meaning of life?

That's the goal of all artificial intelligence: to streamline human effort, help us make better decisions, and improve the way we deal with problems. It can take over a boring, repetitive task, and it can help scientists deal with complex data that would be impossible for a human being to handle or would take a tremendous amount of time to process.

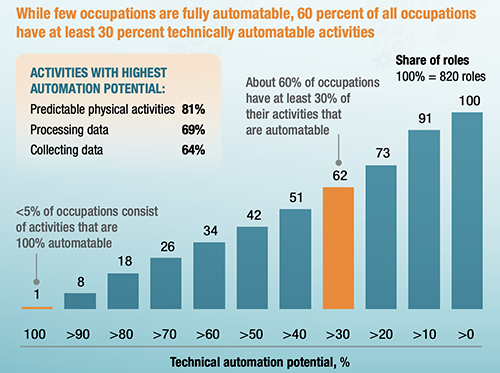

Artificial intelligence is present in almost all industries today. From mobility to healthcare, through education and retail, the application of AI has increased immensely. According to McKinsey, AI can automate predictable tasks as well as data collection and processing. In the United States, these activities make up 51% of activities in the economy, accounting for almost $2.7 trillion in wages.

Automation potential according to the McKinsey Global Institute (https://www.mckinsey.com)

As processes are transformed by the automation of individual activities, people will perform activities that complement the work that machines do, and vice versa.

If we are already living in the future, what is left for the future? Maybe we're closer to I, Robot and Ex Machina than we thought.

Computer Vision

Object Detection and Classification

Computer vision works in three basic steps: acquiring an image, processing it, and understanding it. Images, even large sets, can be acquired in real time through video, photos, or 3D technology for analysis. Deep learning models then automate much of the processing, but they are often trained first by being fed thousands of labeled or pre-identified images. The final step is interpretation, where an object is identified or classified.

Face Detection

The goal is to find faces in photos, recognize them nearly instantaneously, and finally take whatever further action is required, such as allowing access for an approved user. Detection begins by learning a range of very simple, or weak, features in each face that together provide a robust classifier. The resulting models are then organized into a hierarchy of increasing complexity.

Face Recognition

There are two main approaches to face recognition: feature-based methods, which use hand-crafted filters to search for and detect faces, and image-based methods, which learn holistically how to extract faces from the entire image.

Movement Detection

Movement detection follows a few steps. First, the video is segmented into frames, and two frames (A and B) that were taken back-to-back are converted to grayscale. Next, the difference between the two grayscale images is computed. If a significant difference is detected between A and B, we can conclude that some movement has occurred.
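The frame-differencing steps can be sketched in plain Python. This is only an illustration: the function names, the luminance weights used for the grayscale conversion, and both thresholds are assumptions chosen for the example, not values taken from any particular system.

```python
def to_gray(frame):
    """Convert an RGB frame (rows of (r, g, b) tuples) to grayscale intensities."""
    # Common luminance weights; assumed here, other conversions also work.
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in frame]


def movement_detected(frame_a, frame_b, pixel_threshold=25, changed_fraction=0.01):
    """Return True if enough pixels differ between two back-to-back frames."""
    gray_a, gray_b = to_gray(frame_a), to_gray(frame_b)
    changed = 0
    total = 0
    for row_a, row_b in zip(gray_a, gray_b):
        for a, b in zip(row_a, row_b):
            total += 1
            # A pixel counts as changed when its brightness moved noticeably.
            if abs(a - b) > pixel_threshold:
                changed += 1
    return total > 0 and changed / total > changed_fraction


# Two tiny 4x4 frames: frame B has a bright 2x2 patch that frame A lacks.
black = (0, 0, 0)
white = (255, 255, 255)
frame_a = [[black] * 4 for _ in range(4)]
frame_b = [row[:] for row in frame_a]
for y in (1, 2):
    for x in (1, 2):
        frame_b[y][x] = white

print(movement_detected(frame_a, frame_a))  # identical frames
print(movement_detected(frame_a, frame_b))  # frames that differ
```

Requiring a minimum fraction of changed pixels, rather than any single changed pixel, is one simple way to keep sensor noise from triggering a detection.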

Convolutional Neural Network (CNN)

One of the main components of computer vision applications, the CNN is great at capturing patterns in multidimensional spaces. Every convolutional neural network is composed of one or several convolutional layers, each of which is composed of several filters. Each filter has different values and extracts different features from the input image. Early layers typically respond to simple patterns such as edges; moving deeper into the network, the layers detect more complicated objects such as cars, trees, and people.
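To make the idea of a filter concrete, here is a minimal sketch of how a single filter slides over an image to produce a feature map. The `convolve2d` helper and the hand-picked edge filter are assumptions for illustration; in a real CNN the filter values are learned during training.

```python
def convolve2d(image, kernel):
    """Slide a filter over an image with stride 1 and no padding.

    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A 4x4 image with a vertical edge between a dark left half and a bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-picked filter that responds to left-to-right increases in brightness.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The feature map is nonzero only in the column where the edge sits, which is what it means for a filter to extract a feature.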

Deep Learning

Deep learning is a subset of machine learning that is based on artificial neural networks. The learning process is called deep because an artificial neural network consists of multiple layers: an input layer, an output layer, and hidden layers in between. Each layer contains units that transform the input data into information that the next layer can use for a certain predictive task. Thanks to this structure, a machine can learn through its own data processing.
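The way each layer hands transformed data to the next can be sketched as a small forward pass. Everything below is illustrative: the layer sizes, the weights, and the choice of ReLU and sigmoid activations are assumptions, and a real network would learn its weights from data rather than have them written by hand.

```python
import math


def dense_layer(inputs, weights, biases, activation):
    """Compute activation(w . x + b) for every unit of one fully connected layer."""
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hand-picked parameters for illustration only.
hidden_weights = [[1.0, -1.0], [0.5, 0.5]]
hidden_biases = [0.0, -0.5]
output_weights = [[1.0, 1.0]]
output_biases = [0.0]

x = [2.0, 1.0]  # input layer
hidden = dense_layer(x, hidden_weights, hidden_biases, relu)  # hidden layer
prediction = dense_layer(hidden, output_weights, output_biases, sigmoid)  # output layer
print(hidden, prediction)
```

Stacking more hidden layers between the input and the output is what makes a network deeper.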

Kernel

Kernel methods are based on the fundamental concept of defining similarities between objects. They allow, for example, the prediction of properties of new objects based on the properties of known ones, or the identification of common subspaces or subgroups in otherwise unstructured data collections.
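As a rough illustration of predicting a property from similar known objects, the sketch below uses a Gaussian (RBF) kernel as the similarity measure and a similarity-weighted average as the predictor (a form of kernel regression). Both choices, along with the function names and the sample data, are assumptions made for this example; other kernels and other kernel methods follow the same basic idea.

```python
import math


def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) kernel: 1.0 for identical points, falling toward 0 with distance."""
    squared_distance = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * squared_distance)


def kernel_predict(query, known_points, known_values, gamma=1.0):
    """Predict a value for `query` as a similarity-weighted average of known values."""
    weights = [rbf_kernel(query, point, gamma) for point in known_points]
    return sum(w * v for w, v in zip(weights, known_values)) / sum(weights)


# Made-up objects (2D points) with a known numeric property each.
known_points = [(0.0, 0.0), (1.0, 0.0), (5.0, 5.0)]
known_values = [10.0, 12.0, 50.0]

print(rbf_kernel((0.0, 0.0), (0.0, 0.0)))
print(kernel_predict((0.5, 0.0), known_points, known_values))
```

The query point sits midway between the first two known points and far from the third, so the prediction lands very close to the average of the first two values.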

Pooling

Most convolutional neural networks use pooling layers to keep the most prominent parts of the data and gradually reduce its size. In addition, pooling layers help CNNs generalize and make them less sensitive to the displacement of objects across images.
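A minimal sketch of one common variant, max pooling, is shown below. The window size of 2 and the non-overlapping windows are assumptions for the example; average pooling and other window sizes are also used.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 0, 5, 6],
    [1, 2, 7, 8],
]
print(max_pool2d(feature_map))  # [[4, 2], [2, 8]]
```

The 4x4 input shrinks to 2x2, and the strongest response in each window survives even if it shifts by a pixel inside that window.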

Padding

Padding works by extending the area over which a convolutional neural network processes an image. Adding padding to an image lets the filters cover the pixels at its borders, which allows for a more accurate analysis of the image.
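The sketch below shows zero padding, one common form of padding, together with the usual stride-1 output size calculation. The helper names are hypothetical, and padding with zeros is an assumption; other fill strategies exist.

```python
def zero_pad(image, pad=1):
    """Surround a 2D image with `pad` rows and columns of zeros."""
    width = len(image[0]) + 2 * pad
    padded = [[0] * width for _ in range(pad)]
    for row in image:
        padded.append([0] * pad + list(row) + [0] * pad)
    padded.extend([0] * width for _ in range(pad))
    return padded


def output_size(n, k, pad):
    """Output width for an n-wide input, k-wide filter, stride 1."""
    return n + 2 * pad - k + 1


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
padded = zero_pad(image, pad=1)
for row in padded:
    print(row)

print(output_size(3, 3, pad=0))  # 1: a 3x3 filter fits the 3x3 image only once
print(output_size(3, 3, pad=1))  # 3: with padding, the output keeps the input size
```

Without padding, corner pixels are seen by the filter far less often than central ones, and each convolution shrinks the image.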

Natural Language Processing

Speech Recognition

Apple's Siri, Amazon's Alexa, and chatbots are examples of real-world applications. They use speech recognition or typed text entries to recognize patterns in voice commands, and natural language generation to respond with an appropriate action or helpful comments.

Sentiment Analysis

In business, sentiment analysis uncovers hidden insights from social media channels. It identifies emotions in text and classifies opinions about products, promotions, and events as positive, negative, or neutral. For example, you could analyze tweets mentioning your brand in real time and detect comments from angry customers right away.
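A very rough way to picture the three-way classification is a word-list (lexicon) scorer like the one below. The word lists and the function name are hypothetical and deliberately tiny; production systems typically learn sentiment from labeled examples rather than relying on fixed lists.

```python
# Hypothetical, hand-written word lists used only for this illustration.
POSITIVE_WORDS = {"love", "great", "happy", "excellent"}
NEGATIVE_WORDS = {"hate", "terrible", "angry", "broken"}


def classify_sentiment(text):
    """Label text as positive, negative, or neutral by counting lexicon hits."""
    words = [word.strip(".,!?").lower() for word in text.split()]
    score = sum(word in POSITIVE_WORDS for word in words)
    score -= sum(word in NEGATIVE_WORDS for word in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(classify_sentiment("I love this brand, great service!"))
print(classify_sentiment("My order arrived broken and I am angry."))
print(classify_sentiment("The package arrived on Tuesday."))
```

A scorer this simple misses negation and sarcasm, which is one reason learned models are preferred in practice.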

Language Detection

Language detection predicts the language of a given text, which is a building block for many AI applications and for computational linguistics. It is widely used in electronic devices such as mobile phones and laptops, and also in robots. Language detection also helps in tracking and identifying multilingual documents.

Transcription (Speech-to-text)

The objective of a speech-to-text (STT) system is to extract, characterize, and recognize the information in speech. It takes the audio data, tries to identify patterns in it, and produces a conclusion in the form of text. Among the strengths reported for such systems are accuracy rates greater than 96% and latencies of just 50 milliseconds for typical search queries.

Translation

When you translate a sentence in Google Translate, you are using NLP. Language translation is more complex than a simple word-for-word replacement. It requires grammar and context to fully understand a sentence. NLP addresses this by processing the text of the input string and mapping it to the target language, translating it on the fly.

Voice Synthesizing (Text-to-Speech)

A text-to-speech (TTS) system aims to convert natural language text into speech. The field has come a long way over the past few years. Google Assistant and Microsoft’s Cortana are voice assistants that exemplify TTS. Another example is the automation of audio content in news media.

Text Classification

Another example would be text classification, which helps organize unstructured text into categories. It can automate the tagging of incoming support tickets and route them automatically to the right person. For companies, it’s a great way to gain insights from customer feedback.

Ambiguity

By definition, ambiguity refers to sentences that have multiple alternative interpretations. Several forms are relevant to NLP in AI systems, such as lexical, syntactic, and semantic ambiguity. Metonymy and metaphor are other examples. The process of handling ambiguity is called disambiguation.

Synonymity

Synonymity can be solved as a two-step problem: candidate generation and synonym detection. In the first step, given a word, the system generates all possible candidates that might be synonyms for that word. The second step can be solved as a classical supervised learning problem.

Tokenization

A common task in NLP, tokenization is the process of breaking down a piece of text into small units called tokens. A token may be a word, part of a word, or just a character such as a punctuation mark. Hence, tokenization can be broadly classified into three types: word, character, and subword (character n-gram) tokenization.
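The three kinds of tokenization can be sketched with a few short helpers. The function names and the regular expression are assumptions for illustration; real tokenizers handle many more cases (contractions, Unicode, learned subword vocabularies).

```python
import re


def word_tokens(text):
    """Split text into words and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def char_tokens(text):
    """Split text into individual non-whitespace characters."""
    return [char for char in text if not char.isspace()]


def char_ngrams(word, n=3):
    """Character n-grams, a simple stand-in for subword tokenization."""
    return [word[i:i + n] for i in range(len(word) - n + 1)]


print(word_tokens("Tokens are small units."))  # ['Tokens', 'are', 'small', 'units', '.']
print(char_tokens("AI is"))                    # ['A', 'I', 'i', 's']
print(char_ngrams("token"))                    # ['tok', 'oke', 'ken']
```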

Bag of words

A bag-of-words, or BoW for short, is the simplest form of text representation in numbers. It has that name because any information about the order or the structure of words in the document is discarded. The model is only concerned with whether known words occur in the document, not where they occur in the document.
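Here is a minimal sketch of a bag-of-words representation. The helper names are hypothetical, and this version stores word counts; a binary variant that only records presence or absence works the same way.

```python
from collections import Counter


def build_vocabulary(documents):
    """Collect every distinct word across the documents, in sorted order."""
    return sorted({word for doc in documents for word in doc.lower().split()})


def bag_of_words(document, vocabulary):
    """Represent a document as a vector of word counts over the vocabulary."""
    counts = Counter(document.lower().split())
    return [counts[word] for word in vocabulary]


documents = ["the dog bit the man", "the man bit the dog"]
vocabulary = build_vocabulary(documents)
print(vocabulary)  # ['bit', 'dog', 'man', 'the']

for doc in documents:
    print(bag_of_words(doc, vocabulary))  # [1, 1, 1, 2] for both documents
```

The two sentences mean very different things, yet they get identical vectors, which shows exactly what is lost when word order is discarded.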

Embedding

Machine learning approaches to NLP require words to be expressed in vector form, often with tens or hundreds of dimensions. This is in contrast to the thousands or millions of dimensions required for sparse word representations. In many cases, certain tasks also require vector representations of entities more complex than words, such as sentences, paragraphs, and documents.
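The contrast between sparse and dense representations can be sketched as follows. The two-dimensional embedding values are made up for the example (real embeddings are learned from large text collections and have far more dimensions), and cosine similarity is assumed as the comparison measure.

```python
import math


def one_hot(word, vocabulary):
    """Sparse representation: one dimension per vocabulary word."""
    return [1.0 if entry == word else 0.0 for entry in vocabulary]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


vocabulary = ["cat", "dog", "car"]

# Made-up dense vectors for illustration only.
embeddings = {
    "cat": [0.9, 0.1],
    "dog": [0.8, 0.2],
    "car": [0.1, 0.9],
}

# One-hot vectors of different words are always orthogonal.
print(cosine_similarity(one_hot("cat", vocabulary), one_hot("dog", vocabulary)))

# Dense vectors can place related words closer together than unrelated ones.
print(cosine_similarity(embeddings["cat"], embeddings["dog"]))
print(cosine_similarity(embeddings["cat"], embeddings["car"]))
```

With one-hot vectors the dimensionality grows with the vocabulary and every pair of words looks equally unrelated; dense vectors stay small and can encode similarity.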